When most people think of Google DeepMind, they picture world-class breakthroughs like AlphaGo, AlphaFold, or Gemini. But in September 2025, DeepMind revealed another major leap: next-generation robotics AI models designed to give robots the ability to perform complex, real-world tasks with unprecedented flexibility and intelligence.

From sorting laundry to classifying recyclables, these new models are a glimpse into the future of human–robot collaboration. Let’s unpack what they are, why they matter, and how they could transform industries worldwide.

What Are DeepMind’s Robotics AI Models?

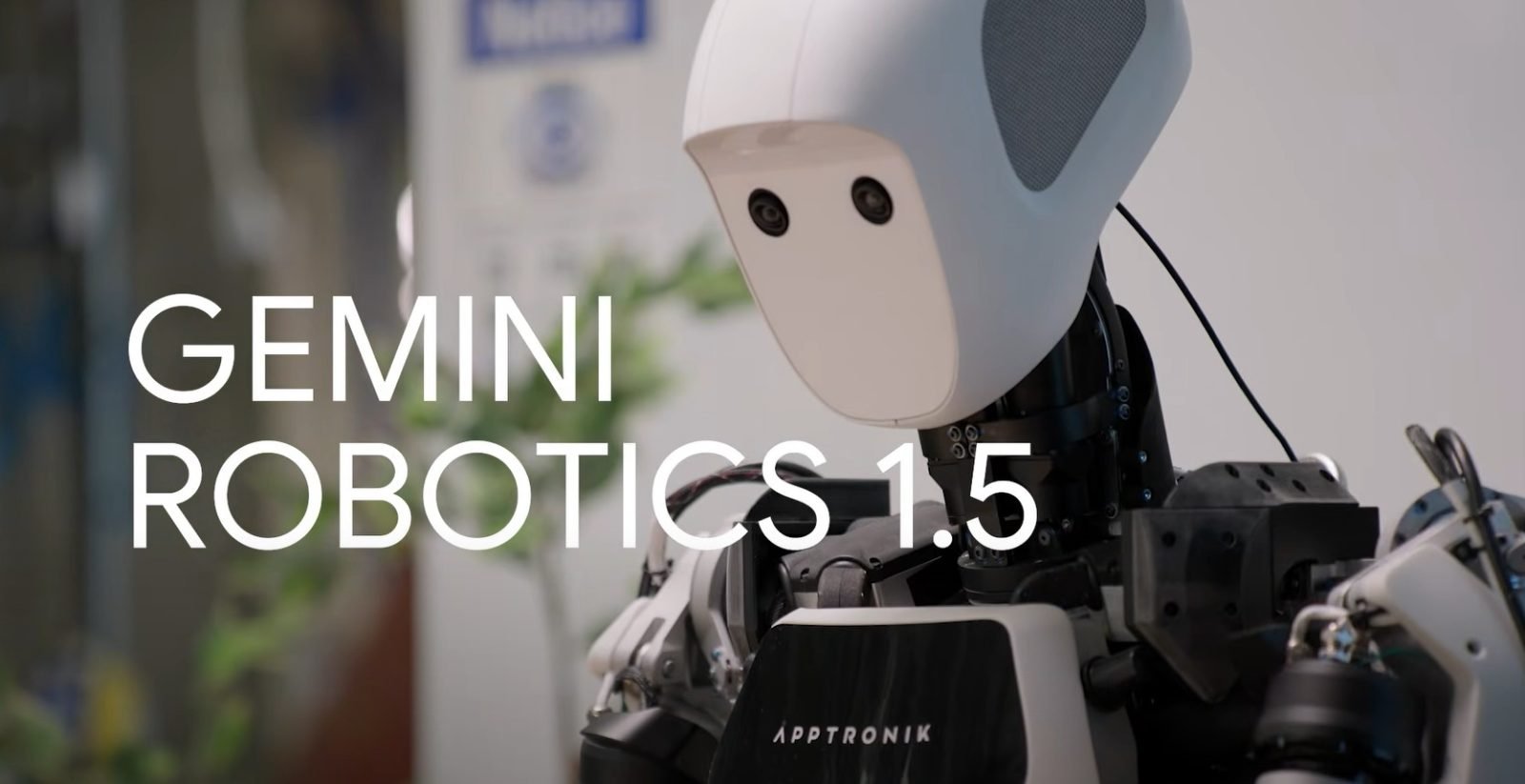

DeepMind’s new robotics models, unveiled in late September 2025 as Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, build on Gemini and combine vision, planning, and control into a single system.

In simple terms, these models allow robots to:

- See and understand their environment (computer vision).

- Plan sequences of actions to achieve a goal (AI reasoning).

- Physically act with precision using robotic arms or mobile units (control systems).

The result? Robots that can not only perform pre-programmed tasks but also adapt on the fly to new situations.
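To make the see, plan, act cycle concrete, here is a deliberately tiny Python sketch of a closed control loop. It is not DeepMind’s code or API: `perceive`, `plan`, `act`, and `run` are hypothetical stand-ins for the vision, reasoning, and control components, and the "world" is just a dictionary of item locations. Adapting on the fly is modeled by re-observing the scene and replanning after every single move rather than running a fixed script.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class World:
    """Hypothetical toy world: maps each item name to its current location."""
    locations: dict[str, str] = field(default_factory=dict)


def perceive(world: World) -> dict[str, str]:
    """Stand-in for the vision component: return a snapshot of the scene."""
    return dict(world.locations)


def plan(observation: dict[str, str], goal: dict[str, str]) -> list[tuple[str, str]]:
    """Stand-in for the reasoning component: list the (item, target) moves still needed."""
    return [(item, target) for item, target in goal.items()
            if observation.get(item) != target]


def act(world: World, item: str, target: str) -> None:
    """Stand-in for the control component: move one item to its target."""
    world.locations[item] = target


def run(world: World, goal: dict[str, str], max_steps: int = 20,
        disturbances: dict[int, tuple[str, str]] | None = None) -> int:
    """Observe, replan, and execute one move per step until the goal holds.

    `disturbances` maps a step index to an (item, location) change made by
    someone else, simulating a setup that changes mid-task.
    Returns the number of moves performed.
    """
    disturbances = disturbances or {}
    for step in range(max_steps):
        if step in disturbances:
            item, location = disturbances[step]
            world.locations[item] = location
        remaining = plan(perceive(world), goal)  # replan from a fresh observation
        if not remaining:
            return step
        item, target = remaining[0]
        act(world, item, target)
    if not plan(perceive(world), goal):  # goal reached on the very last move
        return max_steps
    raise RuntimeError("goal not reached within max_steps")


# Usage: sort two items; at step 1 someone drops the red shirt back on the floor.
world = World({"red shirt": "floor", "white sock": "floor"})
goal = {"red shirt": "colors basket", "white sock": "whites basket"}
steps = run(world, goal, disturbances={1: ("red shirt", "floor")})
print(steps)  # 3
print(world.locations == goal)  # True
```

Because the plan is recomputed from a fresh observation at every step, the loop recovers when the red shirt lands back on the floor; a fixed two-move script would have finished with the shirt still there.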

Key Features of DeepMind’s Robotics AI

- Multimodal Intelligence
  - Integrates visual inputs (cameras), text instructions, and physical feedback.
  - Example: Tell the robot “sort the colored clothes into this basket,” and it executes, even if the setup changes.

- Generalization Beyond Training
  - Unlike older robotics systems that required narrow training, these models can handle new environments and tasks with minimal retraining.

- Task Diversity
  - Early demos include sorting laundry, separating recyclables, stocking shelves, and simple cooking prep tasks.

- Safety & Adaptability
  - Built-in safety layers to prevent harmful or unintended actions.
  - Ability to learn from mistakes and adjust strategies.

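The safety layers are not described in detail here, so the sketch below only illustrates one generic pattern such a layer might include: a filter between the planner and the motors that rejects or tones down commands before they reach the hardware. `SafetyLimits`, `Command`, and `check_command` are hypothetical names, and the workspace bounds and speed cap are invented example values, not real robot specifications.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyLimits:
    """Hypothetical limits: an allowed workspace box (metres) and a speed cap (m/s)."""
    x_range: tuple[float, float] = (-0.5, 0.5)
    y_range: tuple[float, float] = (-0.5, 0.5)
    z_range: tuple[float, float] = (0.0, 0.8)
    max_speed: float = 0.25


@dataclass(frozen=True)
class Command:
    """A hypothetical motion request coming from the planner."""
    target: tuple[float, float, float]  # desired gripper position (x, y, z)
    speed: float                        # requested speed


def check_command(cmd: Command, limits: SafetyLimits) -> Command | None:
    """Veto commands aimed outside the workspace; clamp excessive speed.

    Returns a safe (possibly slowed-down) command, or None if it must be rejected.
    """
    ranges = (limits.x_range, limits.y_range, limits.z_range)
    for value, (low, high) in zip(cmd.target, ranges):
        # The chained comparison is False for NaN, so NaN targets are rejected too.
        if not low <= value <= high:
            return None
    if not cmd.speed > 0:  # reject zero, negative, or NaN speeds
        return None
    return Command(cmd.target, min(cmd.speed, limits.max_speed))


limits = SafetyLimits()
print(check_command(Command((0.1, 0.2, 0.3), 1.0), limits))
# Command(target=(0.1, 0.2, 0.3), speed=0.25)
print(check_command(Command((0.9, 0.0, 0.3), 0.1), limits))  # None
```

A production system would likely need far more than this, such as collision checking and higher-level judgments about whether an action is appropriate at all; the sketch only shows that unsafe commands can be intercepted before they are executed.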

How Do They Compare to Previous Robotics Efforts?

Historically, robots were either:

- Industrial robots: Very fast and precise, but locked into repetitive assembly-line tasks.

- Research robots: Flexible but impractical outside lab settings.

DeepMind’s new models bridge this gap by combining AI reasoning with physical control:

| Feature | Traditional Robots | DeepMind Robotics AI |
| --- | --- | --- |
| Flexibility | Low | High |
| Adaptability | Minimal | Real-time |
| Training | Task-specific | Generalizable |
| Use Cases | Manufacturing | Homes, warehouses, hospitals, recycling plants |

Why This Matters Globally

The launch of DeepMind’s robotics AI could impact multiple industries:

- Healthcare: Robots assisting in elder care, patient support, or supply handling.

- Retail: Automated shelf-stocking, inventory checks, and logistics.

- Recycling & Sustainability: Smarter waste sorting, reducing landfill overflow.

- Hospitality: Food prep, cleaning, and delivery assistance.

- Homes: Daily chores like laundry folding, dish loading, or tidying.

By giving robots the ability to adapt, the models lower barriers to adoption outside controlled factory environments.

Example Use Cases

- Laundry Folding Robots
  - Can distinguish between shirts, socks, and trousers, even when crumpled.

- Recycling Stations
  - Recognize plastics, metals, and paper, improving waste separation accuracy.

- Smart Warehousing
  - Pick and place items dynamically, even when packages vary in size or placement.

- Hospital Assistance
  - Fetching supplies or guiding patients without needing exact pre-programming.

Challenges & Ethical Considerations

While promising, these robotics AI models come with challenges:

- Job Displacement: Automation in warehouses and retail could replace certain human roles.

- Safety Concerns: Robots acting in public spaces need robust safeguards.

- Bias & Training Data: Gaps or biases in training data can lead vision and reasoning models to misjudge objects or situations, and such errors could cause harm.

- Cost of Deployment: Early models may be too expensive for mass adoption.

DeepMind has emphasized a “responsible rollout”, working with partners in healthcare and sustainability first, before mainstream consumer adoption.

How They Fit Into DeepMind’s Bigger Picture

These robotics models aren’t standalone; they’re part of a Gemini-powered ecosystem where reasoning, vision, and language all converge.

- Gemini for reasoning: Provides planning capabilities.

- Vision AI: Allows robots to “see” and understand surroundings.

- Control AI: Executes fine-motor actions reliably.

Together, these components create AI agents that can act intelligently in the physical world, not just in digital spaces.

Google DeepMind’s robotics AI models are more than just an experiment: they represent a major leap toward practical, adaptive robots that can function in everyday settings.

Whether it’s folding laundry, helping in hospitals, or making recycling more efficient, these models could redefine how humans interact with machines.

The big question isn’t whether they’ll change our lives, but how quickly industries, policymakers, and society will be ready to integrate them.

One thing is certain: September 2025 will be remembered as the month when AI moved further from screens and servers into the physical world around us.